Institutions now face a critical decision: remote exam proctoring software vs human proctoring.

Higher-education leaders, certification bodies, and corporate L&D teams must select methods that protect integrity without harming candidates. Consequently, every choice carries financial, legal, and reputational stakes.

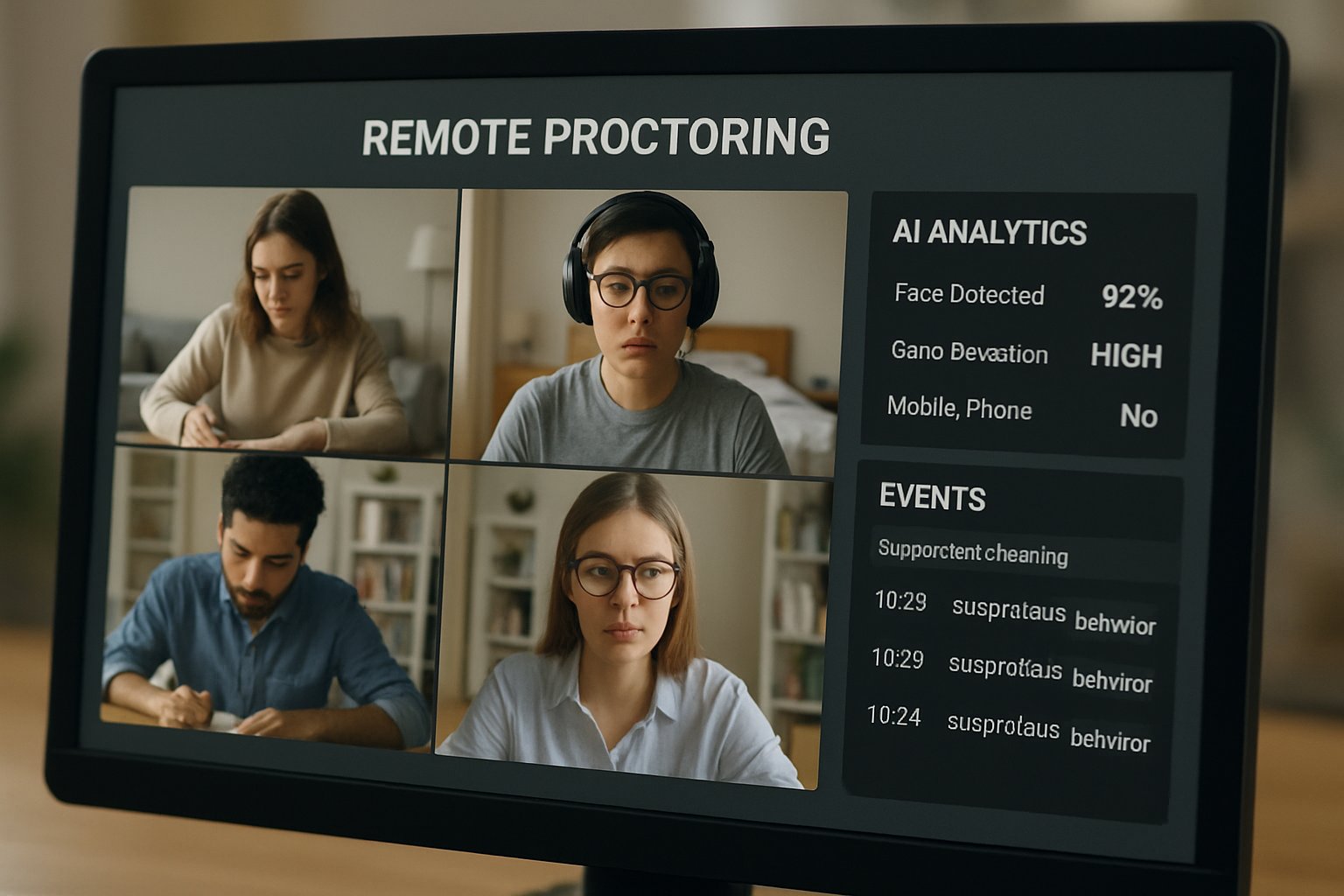

This guide compares live remote proctors and AI remote exam proctoring. It also explains how automated exam proctoring software, automated cheating detection, and hybrid proctoring models shape 2025 strategy.

Remote Exam Proctoring Software vs Human Proctoring

The market conversation still starts with the phrase remote exam proctoring software vs human proctoring. Decision-makers weigh privacy, cost, and cultural acceptance when they evaluate both approaches.

Global adoption of AI remote exam proctoring surged during the pandemic. However, recent lawsuits over biometric data and bias keep human oversight relevant.

Meanwhile, students demand fair, low-stress testing. Therefore, institutions increasingly test a hybrid proctoring model that combines automated cheating detection with real-time intervention by live remote proctors.

Key takeaway: neither model stands alone. Transitional planning remains essential.

Market Shift Drivers Today

Research firm MRFR estimates the remote proctoring market could reach USD 8.2 billion by 2035. Rapid growth stems from online program expansion and corporate reskilling mandates.

AI remote exam proctoring appeals because it scales. Automated exam proctoring software can monitor thousands simultaneously, cutting per-exam costs to mere dollars.

Furthermore, hybrid proctoring models reduce manual labor while preserving human judgment for flagged cases. Vendors claim automated cheating detection accuracy above 95%, yet few peer-reviewed audits confirm such numbers.

Nevertheless, several universities report comparable scores between remote and in-person testing, reinforcing confidence in AI exam monitoring.

Key takeaway: scale and flexibility drive adoption. Yet verification of vendor claims remains vital.

Next, examine direct cost comparisons.

Costs And Scalability Factors

Price differentials remain stark. Automated exam proctoring software averages USD 0.50–5.00 per session. Live remote proctors cost USD 8–30 per candidate hour.

Consequently, large open-enrollment courses favor AI exam monitoring. In contrast, specialty licensure boards sometimes retain campus staff because error tolerance is low.
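To make the gap concrete, the following minimal sketch multiplies the price ranges quoted above across a cohort. The cohort size, the exam length, and the assumption of one session per candidate are hypothetical inputs chosen for illustration, not figures from any vendor.

```python
# Price ranges quoted in the text above (USD).
AUTOMATED_PER_SESSION = (0.50, 5.00)      # automated software, per session
LIVE_PER_CANDIDATE_HOUR = (8.00, 30.00)   # live remote proctors, per candidate hour


def cost_range(candidates: int, exam_hours: float) -> dict:
    """Return the (low, high) total cost in USD for each model.

    Assumes one session per candidate (an illustrative simplification).
    """
    automated = tuple(rate * candidates for rate in AUTOMATED_PER_SESSION)
    live = tuple(rate * candidates * exam_hours for rate in LIVE_PER_CANDIDATE_HOUR)
    return {"automated": automated, "live": live}


# Hypothetical cohort: 5,000 candidates sitting a 2-hour exam.
totals = cost_range(candidates=5_000, exam_hours=2.0)
for model, (low, high) in totals.items():
    print(f"{model}: USD {low:,.0f} to {high:,.0f}")
```

Under these assumptions the automated range is USD 2,500 to 25,000 and the live range is USD 80,000 to 300,000. The sketch leaves out support, bandwidth, and scheduling overheads, so real totals will differ.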

Hybrid proctoring model agreements often deliver middle-ground pricing, blending automated cheating detection with short expert reviews. Institutions negotiate volume discounts and multi-year clauses for stability.

Moreover, scalability involves more than dollars. Automated tools need bandwidth, device compatibility, and quick help desks. Live remote proctors require careful scheduling across time zones.

Key takeaway: cost gaps favor automation, but hidden technical costs can erode savings.

Cost is only one piece. Fairness and trust decide long-term success.

Integrity And Fairness Debates

Critics argue AI remote exam proctoring flags neurodivergent or dark-skinned students more often. Studies in the International Journal for Educational Integrity confirm heightened anxiety under webcam surveillance.

Furthermore, advocacy groups highlight retention of biometric data without clear consent. Regulators in Ontario and several U.S. states have opened investigations.

Key Live Proctoring Strengths

Live remote proctors read context, support accommodations quickly, and calm anxious testers. Therefore, high-stakes exams still rely on human eyes despite higher costs.

Automated Proctoring Limitations Today

Automated cheating detection struggles with lighting, facial coverings, and cultural communication differences. Moreover, AI exam monitoring can miss sophisticated collusion that occurs off-camera.

Key takeaway: ethical and technical gaps persist. Transparent review workflows and hybrid proctoring model designs mitigate risk.

Accuracy questions deserve a deeper look.

Technology Accuracy Questions Raised

Vendors tout advanced computer-vision pipelines and behavioral analytics. However, a 2022 arXiv study found that evasion tactics fooled several systems within minutes.

Additionally, a 27,115-candidate medical study showed score parity, yet detection accuracy was not disclosed. That gap worries compliance officers.

AI remote exam proctoring improves yearly through model retraining, but bias audits remain rare. Automated exam proctoring software needs diverse training data to lower false positives in gaze or face detection.

Meanwhile, live remote proctors can also err due to fatigue. Therefore, layered safeguards, such as automated cheating detection followed by rapid human confirmation, boost confidence.
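One way to picture such a layered safeguard is a two-stage flow in which software only nominates flags and a person makes every final decision. The sketch below is illustrative only: the `Flag` fields, the 0.5 threshold, and the sample data are hypothetical and do not describe any vendor's pipeline.

```python
from dataclasses import dataclass


@dataclass
class Flag:
    candidate_id: str
    reason: str
    score: float  # hypothetical detector confidence between 0 and 1


def needs_human_review(flag: Flag, threshold: float = 0.5) -> bool:
    """Stage 1: the automated detector only nominates flags for review."""
    return flag.score >= threshold


def resolve(flag: Flag, reviewer_confirms: bool) -> str:
    """Stage 2: a human reviewer makes the final call on every nominated flag."""
    return "incident" if reviewer_confirms else "cleared"


# Hypothetical flags from one exam session.
flags = [
    Flag("c-101", "second face in frame", 0.92),
    Flag("c-102", "gaze off screen", 0.35),
    Flag("c-103", "gaze off screen", 0.61),
]

# Hypothetical reviewer decisions, keyed by candidate.
reviewer_decisions = {"c-101": True, "c-103": False}

outcomes = {}
for flag in flags:
    if needs_human_review(flag):
        outcomes[flag.candidate_id] = resolve(flag, reviewer_decisions[flag.candidate_id])
    else:
        outcomes[flag.candidate_id] = "no action"

print(outcomes)
```

The design point is that no candidate is marked as an incident on the software's score alone. The threshold only controls how much work reaches the human reviewer.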

Key takeaway: accuracy claims require independent validation before procurement.

How should leaders choose the best solution?

Choosing Best Proctoring Approach

Successful institutions follow a structured checklist before signing contracts:

- Align assessment stakes with monitoring depth and the availability of live remote proctors.

- Audit vendor data retention, AI exam monitoring bias reports, and security certifications.

- Pilot automated exam proctoring software with diverse participant groups to gauge false-flag rates.

- Budget for support teams, hybrid proctoring model infrastructure, and accommodation workflows.

- Establish transparent appeals and clear student communication to reduce stress.
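The pilot step in the checklist above can be made measurable by comparing false-flag rates across participant groups. The following sketch assumes each pilot record notes the group, whether the software flagged the candidate, and whether a human review confirmed misconduct. The record layout, group labels, and data are hypothetical.

```python
from collections import Counter

# Hypothetical pilot records:
# (participant group, flagged by software, misconduct confirmed on review)
pilot = [
    ("group_a", True, False),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_b", True, False),
    ("group_b", True, False),
    ("group_b", False, False),
    ("group_b", False, False),
    ("group_b", True, True),  # confirmed case, excluded from the false-flag rate
]


def false_flag_rates(records):
    """Share of candidates without confirmed misconduct who were still flagged, per group."""
    honest = Counter()
    falsely_flagged = Counter()
    for group, flagged, confirmed in records:
        if confirmed:
            continue
        honest[group] += 1
        if flagged:
            falsely_flagged[group] += 1
    return {group: falsely_flagged[group] / total for group, total in honest.items()}


rates = false_flag_rates(pilot)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.0%} of honest candidates flagged")
```

In this made-up data, group_b is falsely flagged twice as often as group_a (50% versus 25%), which is the kind of disparity a pilot should surface before procurement. A real pilot would need far larger samples to support any conclusion.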

Moreover, many universities now maintain internal steering committees that compare remote exam proctoring software vs human proctoring performance metrics each semester.

Consequently, policies evolve as technology matures and regulations tighten.

Key takeaway: data-driven, inclusive pilots clarify the right blend of automation and live remote proctors.

The final section summarizes lessons and presents a trusted partner.

Conclusion And Next Steps

The debate around remote exam proctoring software vs human proctoring remains nuanced. Leaders must balance cost, scale, accuracy, and candidate wellbeing. AI remote exam proctoring, automated exam proctoring software, and hybrid proctoring model solutions now dominate large programs, while live remote proctors still secure the highest-stakes tests.

Why Proctor365? Our platform merges AI exam monitoring with experienced live remote proctors, delivering automated cheating detection, advanced identity verification, and global scalability. Trusted by exam bodies worldwide, Proctor365 ensures integrity without compromising fairness. Explore how Proctor365 brings remote exam proctoring software and human proctoring together at Proctor365.ai.

Frequently Asked Questions

- What distinguishes remote exam proctoring software from human proctoring?

Remote exam proctoring uses AI and automated cheating detection to monitor many candidates, while human proctors provide contextual support. Hybrid models combine both to ensure robust fraud prevention and identity verification.

- How does hybrid proctoring enhance exam security and fairness?

Hybrid proctoring blends automated exam monitoring with live proctor oversight, reducing false flags while ensuring prompt human intervention. This integrated approach supports effective fraud prevention, accurate identity verification, and fair candidate accommodations.

- How does Proctor365 ensure exam integrity and candidate fairness?

Proctor365 merges advanced AI exam monitoring with experienced live remote proctors, delivering automated cheating detection and robust identity verification. This blend secures exam integrity, prevents fraud, and maintains a fair testing environment.

- What are the cost benefits of AI proctoring compared to traditional human proctors?

AI remote exam proctoring scales efficiently at a lower per-exam cost. When complemented with human oversight, it offers a cost-effective solution that balances affordability with the thorough fraud prevention and identity verification needed for high-stakes exams.